This website contains affiliate links. We will earn a small commission on purchases made through these links. Some of the links used in these articles will direct you to Amazon. As an Amazon Associate, I earn from qualifying purchases.

As cameras and sensors explore computational photography, we’ll see sensor technology change in exciting new ways to support what’s now possible with faster, real-time processing.

One such technology is the Quad Bayer sensor, which Sony developed and which can already be found in the DJI Mavic Air 2. Sony has also developed a 48MP full-frame version, which we now see in the Sony A7S III and the Sony FX3.

The Quad Bayer sensor is a pretty cool advancement in sensor technology, and it has the potential to be a true game-changer for our hybrid photo/video systems.

What Is A Quad Bayer Sensor?

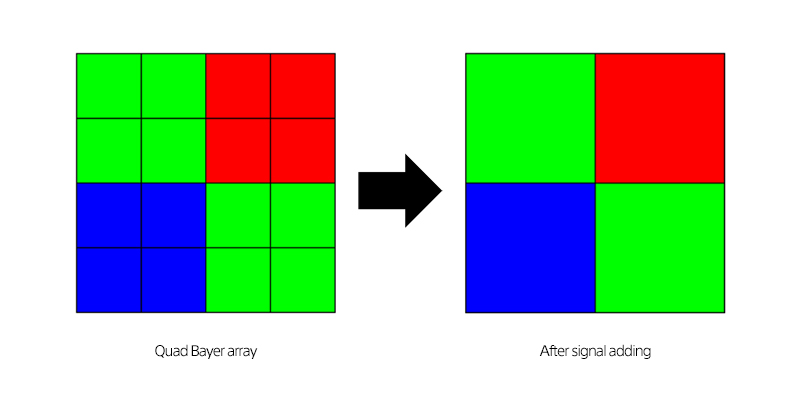

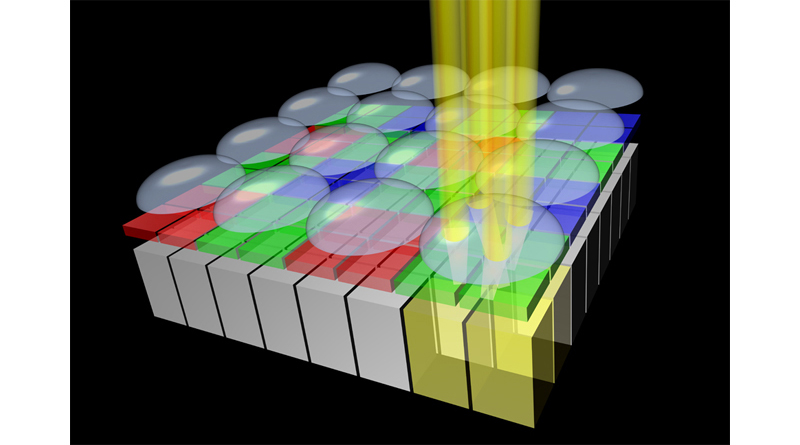

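It’s a sensor in which each color site of a standard Bayer filter is divided into four photosites (a 2×2 group) that share the same color filter, instead of each photosite getting its own offset RGB filter.

This means that each 2×2 color group can function in two ways.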

- As a low-megapixel sensor, the four photosites in each group are read as a single pixel, which performs better when fewer photons reach the sensor, such as in low-light situations. This improves sensitivity and efficiency.

- As a high-megapixel sensor, each photosite records its own data, which is useful in bright conditions like direct sunlight, where plenty of photons reach every photosite.
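To make the two modes concrete, here is a minimal Python sketch of a Quad Bayer color-filter layout. It assumes an RGGB base cell, and the function names are purely illustrative, not any manufacturer's API. Binning each 2×2 same-color block yields an ordinary Bayer mosaic with a quarter of the pixel count, while the full-resolution mode simply keeps every photosite value.

```python
# Hypothetical sketch of a Quad Bayer color layout and its 2x2 binning mode.
# Assumption: the underlying Bayer cell is R G / G B ("RGGB"); real sensors may differ.

BAYER_TILE = [["R", "G"], ["G", "B"]]


def quad_bayer_layout(rows, cols):
    """Color filter over each photosite: every Bayer color site becomes a 2x2 block."""
    return [[BAYER_TILE[(r // 2) % 2][(c // 2) % 2] for c in range(cols)]
            for r in range(rows)]


def bin_layout(layout):
    """Color of each binned pixel: one entry per 2x2 same-color block."""
    return [row[::2] for row in layout[::2]]


def bin_2x2(values):
    """Low-light mode: average each 2x2 block (even dimensions assumed) into one pixel."""
    return [[(values[r][c] + values[r][c + 1] + values[r + 1][c] + values[r + 1][c + 1]) / 4
             for c in range(0, len(values[0]), 2)]
            for r in range(0, len(values), 2)]


layout = quad_bayer_layout(4, 4)
for row in layout:
    print(" ".join(row))
# R R G G
# R R G G
# G G B B
# G G B B

print(bin_layout(layout))  # [['R', 'G'], ['G', 'B']]: an ordinary Bayer cell
```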

Applications For Photography

The Quad Bayer sensor is not necessarily that useful for still photography, where there is no reason not to always capture at the higher resolution and then scale the image down for better low-light performance.

This was a common misconception about the A7S II vs. the A7R II. People would assume the A7S II was better in low light for stills; however, once the A7R II image was scaled down to the same size, the results were very similar.

Generally, if the processing power is good enough to scale in-camera and on the fly, there is not really much downside to using higher-resolution sensors in low light.
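The still-photography argument can be checked with a small simulation. This hypothetical sketch models photon shot noise only (Poisson arrivals) and ignores read noise, heat, and the sensor tuning differences that separate real cameras. Under that assumption, averaging four small photosites doubles the signal-to-noise ratio whether the averaging happens on the sensor or later when you downscale the file.

```python
import math
import random
import statistics


def poisson(lam, rng):
    """Draw a Poisson-distributed photon count (Knuth's method, fine for small means)."""
    limit, k, p = math.exp(-lam), 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1


def snr(samples):
    """Signal-to-noise ratio: mean divided by standard deviation."""
    return statistics.mean(samples) / statistics.pstdev(samples)


def simulate(mean_photons=16, trials=10000, seed=1):
    """Compare one small photosite with the average of four (binning or downscaling)."""
    rng = random.Random(seed)
    single = [poisson(mean_photons, rng) for _ in range(trials)]
    # Averaging four neighboring photosites: binning on-sensor and shrinking the
    # full-resolution file later are the same arithmetic in this shot-noise-only model.
    averaged = [sum(poisson(mean_photons, rng) for _ in range(4)) / 4
                for _ in range(trials)]
    return snr(single), snr(averaged)


single_snr, averaged_snr = simulate()
print(f"single photosite SNR: {single_snr:.2f}")   # close to sqrt(16) = 4
print(f"2x2 averaged SNR:     {averaged_snr:.2f}")  # close to 2 * 4 = 8
```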

We even see cameras like the Leica M11 that let you select different resolutions by binning pixels, which actually improves dynamic range.

Applications For Videography

The Quad Bayer sensor is technically a high-resolution sensor with a fine pixel pitch, but with intelligent analog-to-digital conversion, it can play two different roles.

- Superior 4K low-light performance

- A high-resolution sensor that can produce 8K images

For video, a Quad Bayer sensor can be very useful because it lets you adjust your resolution to your lighting conditions. In bright light, you could capture 8K by treating every photosite as its own pixel; in low light, you can combine each 2×2 color group for better low-light shooting.
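Some quick resolution arithmetic shows why the two modes line up with 4K and 8K. The 8480 × 5664 photosite grid below is an assumed, illustrative 48MP layout, not a published specification; the frame sizes are the standard 4K UHD and 8K UHD dimensions.

```python
# Hypothetical 48MP Quad Bayer grid: assumed 8480 x 5664 photosites.
SENSOR = (8480, 5664)
FRAMES = {"4K UHD": (3840, 2160), "8K UHD": (7680, 4320)}


def binned(size):
    """Resolution after combining every 2x2 same-color group into one pixel."""
    return size[0] // 2, size[1] // 2


def megapixels(size):
    return size[0] * size[1] / 1e6


def fits(size, frame):
    """True if the readout has at least as many pixels as the video frame in both directions."""
    return size[0] >= frame[0] and size[1] >= frame[1]


for mode, size in {"full (1x1)": SENSOR, "binned (2x2)": binned(SENSOR)}.items():
    supported = [name for name, frame in FRAMES.items() if fits(size, frame)]
    print(f"{mode}: {size[0]}x{size[1]} = {megapixels(size):.1f}MP, covers {supported}")
# full (1x1): 8480x5664 = 48.0MP, covers ['4K UHD', '8K UHD']
# binned (2x2): 4240x2832 = 12.0MP, covers ['4K UHD']
```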

Why Do You Need Bigger Photosites For Low Light?

You need larger photosites for low light because, as light decreases, the density of photons decreases as well. A larger photosite collects light from a larger area, so it still gathers enough photons to produce an acceptable signal voltage.

In daylight, there are 11,400 photons in a cubic millimeter. Individual photons of violet light (around 400 nm), the most energetic visible light, carry about 3.10 electron volts, while orange light carries about 2.06 eV. When the light level drops, the density of photons drops with it, so you need a much bigger surface to capture the same amount of energy.

I’m not about to do the math on photon density and ISO, but I think you understand. Cut the light to 1/64 (six stops), and you’ll need a much larger surface area to absorb the same energy. Light has a finite resolution in the form of how much energy it can deliver when interacting with electrons.
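The photon energies above follow from the relation E = hc/λ, and photon density follows from dividing the photon flux by the speed of light. In this sketch, the 1000 W/m² irradiance and the single 550 nm wavelength are simplifying assumptions standing in for the full solar spectrum, so treat the density as an order-of-magnitude estimate. It lands at several thousand photons per cubic millimeter, the same order as the daylight figure above, and it scales down linearly as the light is cut.

```python
# Physical constants (exact SI values).
PLANCK = 6.62607015e-34          # J*s
LIGHT_SPEED = 2.99792458e8       # m/s
ELECTRON_VOLT = 1.602176634e-19  # J


def photon_energy_ev(wavelength_nm):
    """E = h*c / wavelength, converted to electron volts."""
    return PLANCK * LIGHT_SPEED / (wavelength_nm * 1e-9) / ELECTRON_VOLT


def photon_density_per_mm3(irradiance_w_m2, wavelength_nm):
    """Photons per cubic millimeter for light of the given irradiance at a single wavelength."""
    energy_j = photon_energy_ev(wavelength_nm) * ELECTRON_VOLT
    flux_per_m2_s = irradiance_w_m2 / energy_j  # photons crossing 1 m^2 per second
    per_m3 = flux_per_m2_s / LIGHT_SPEED        # photons in flight per cubic meter
    return per_m3 / 1e9                         # 1 m^3 = 1e9 mm^3


print(f"violet, 400 nm: {photon_energy_ev(400):.2f} eV")  # 3.10 eV
print(f"orange, 600 nm: {photon_energy_ev(600):.2f} eV")  # 2.07 eV
daylight = photon_density_per_mm3(1000, 550)              # assumed: 1000 W/m^2 at 550 nm
print(f"daylight: ~{daylight:,.0f} photons per mm^3")
print(f"1/64 light: ~{photon_density_per_mm3(1000 / 64, 550):,.0f} photons per mm^3")
```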
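A rough sketch of the surface-area argument, assuming photon shot noise is the only noise source and a 100% fill factor. The photon budget of 1000 photons per square micron and the 4 µm and 8 µm pitches are hypothetical round numbers; only the ratios matter.

```python
import math


def photons_collected(photons_per_um2, pitch_um):
    """Expected photon count for a square photosite of the given pitch (100% fill factor assumed)."""
    return photons_per_um2 * pitch_um ** 2


def shot_noise_snr(photons):
    """Photon arrivals are Poisson: mean N, standard deviation sqrt(N), so SNR = sqrt(N)."""
    return math.sqrt(photons)


# Hypothetical numbers: 1000 photons per square micron in bright light,
# a 4 um photosite versus a 2x2 group acting as one 8 um pixel.
for label, light in [("bright", 1000), ("1/64 light", 1000 / 64)]:
    for pitch in (4, 8):
        n = photons_collected(light, pitch)
        print(f"{label:>10}, {pitch} um: {n:8.0f} photons, SNR {shot_noise_snr(n):6.1f}")
```

Cutting the light to 1/64 lowers the SNR eightfold (the square root of 64), and the 2×2 group, with four times the area, wins back a factor of two.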

This is why there is little point in buying a high-megapixel camera if you mostly shoot in low light with fast shutter speeds. The amount and energy of the available light still limit you, and more, smaller pixels are less efficient at capturing this energy. A Quad Bayer sensor gives you the best of both worlds, as long as the 2×2 arrangement of each color group doesn’t introduce strange artifacts.

I’ve been trying to figure out for some time what Sony could do that would be game-changing for the A7S III. I think this is it.

The Potential Of A Quad Bayer Sensor In A Future Camera Like The Sony A7S III

The whole life and soul of the A7S II was low-light performance. A Quad Bayer sensor would allow the camera to maintain its amazing 4K low-light performance by combining each quad’s data into a single pixel, while also letting it produce an 8K image in well-lit situations by reading every photosite individually.

Another benefit of shooting 8K is that you can record with heavier chroma subsampling, like 4:2:0, and recover full color resolution (effectively 4:4:4 at 4K) by scaling down in post-production. So 8K is not useless: you can always pull a better image by scaling down a larger one. Cameras like the Canon R5 and the Nikon Z8 do this internally, oversampling 8K data for their 4K video modes.
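The chroma claim comes down to counting samples. This minimal sketch uses the standard UHD frame sizes and treats each subsampling scheme as simple divisors of the chroma planes; it ignores compression and bit depth, which also affect the final image.

```python
# Chroma subsampling as (horizontal, vertical) divisors of the chroma planes.
SUBSAMPLING = {"4:4:4": (1, 1), "4:2:2": (2, 1), "4:2:0": (2, 2)}


def chroma_plane(width, height, scheme):
    """Size of each chroma (color-difference) plane for a frame of the given size."""
    dx, dy = SUBSAMPLING[scheme]
    return width // dx, height // dy


def full_chroma_after_downscale(source, scheme, target):
    """True if the source chroma planes have at least one sample per target pixel (4:4:4)."""
    cw, ch = chroma_plane(*source, scheme)
    return cw >= target[0] and ch >= target[1]


UHD_8K, UHD_4K = (7680, 4320), (3840, 2160)
print(chroma_plane(*UHD_8K, "4:2:0"))                        # (3840, 2160)
print(full_chroma_after_downscale(UHD_8K, "4:2:0", UHD_4K))  # True
print(full_chroma_after_downscale(UHD_4K, "4:2:0", UHD_4K))  # False
```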

A Quad Bayer sensor would make the Sony A7sIII an incredibly versatile tool with insane capabilities unmatched by the competition.

Update: The Sony A7S III uses a Quad Bayer sensor derived from a 48MP design. The camera combines all that information to produce amazing video results, but unfortunately, you can never shoot in 48MP mode; it’s locked to 4K.

Comments

Dear Alik,

Regarding your interest in the article: “I would actually be curious to see a comparison between a 48MP Quad Bayer vs a standard 48MP Bayer and see if they produce the same results at the high resolution, or if the quad array creates artifacts within the interpolation.”

I am an amateur photography enthusiast. Comparing JPEGs from the Huawei P30 Pro and Xiaomi Mi 9T, which use 40MP and 48MP Quad Bayer sensors, against full-resolution output from the Nokia 808 (38MP) and Lumia 1020 (41MP), I have observed the following:

1. Foliage from the Quad Bayer sensors is smeared, like watercolor

2. Pixel-level clarity is higher with the Bayer filter (when pixel peeping)

3. Full-resolution raw images from Quad Bayer are inferior to those from a standard Bayer filter

...in case the above is of interest to you.

Thanks, Daaz. I kind of suspected something like that.

Even the Fujifilm X-Trans sensor has some disadvantages with its noise patterns because of the quad green pixel configuration.

It’s looking like the A7S III is going to be a Quad Bayer too.

At that level, with those smartphones and their small pixel pitch, I wonder if diffraction is more of an issue with the Quad Bayer sensors.

Read my explanation above… I think “smeared like watercolor” is an excellent explanation of what “rematrixing” is doing. It’s moving samples around in space.

Alik, confirming what Daaz says below: using the individual pixels in a 48MP quad Bayer sensor will not produce images as sharp as a regular 48MP Bayer sensor, because the differently colored sub-pixels are farther apart from each other than they are in a regular Bayer sensor. You can actually end up with images that don’t look as good in the “normal” (i.e. 48MP) mode, because you don’t get the dynamic range benefit of the HDR mode, where half of the sensor takes a short exposure, half takes a long exposure, and the two are combined.

Thanks for the comment.

Check this out. You can download some samples from the Mavic Air 2, which uses a 48MP quad Bayer sensor: https://dronexl.co/2020/04/28/dji-mavic-air-2-dng-raw-jpg-and-hdr-free-file-download/ Tiny sensor, of course.

It looks like it’s having some of the same funky grain pattern issues Fujifilm has with its X-Trans sensor, which uses a quad green pixel configuration. Things get wormy. But it’s not terrible, all things considered, with the Mavic. I would be curious to see a larger sensor with better lenses doing it.

It does seem like the 100% crop has an almost upscaled feel to it so your assessment is looking to be correct.

It will be interesting to see, if Sony does indeed use one of these sensors in the A7S III (or whatever it might be called), how they actually use it and what options will be available in terms of using the different modes of the sensor. The thing is that with the quad Bayer sensor in normal mode, you can sometimes get images that look somewhat sharper than in the pixel-binned or HDR modes, but it depends a lot on the content of the image. However, at least when pixel peeping, it’s never going to look like a regular Bayer sensor image at the same resolution. I think with phones using these quad Bayer sensors, most of the time the final output is some tiny social media image, so the artifacts are not so obvious. I think a lot of consumer drone footage (from drones with built-in cameras) also ends up on YouTube, where it is more often 1080p.

If they make it for video, I could see them doing a lot of processing on it to clean it up too. When things are in motion with motion blur it’s harder to see those little artifacts as well.

Camera companies tend to understand how light works and images are processed. I don’t expect to see any actual Quad Bayer sensors in real cameras. But there’s another use for this.

The Olympus OM-1, OM System OM-1 Mark II, and OM System OM-3 all use a stacked sensor (Sony IMX472) that’s nominally 20 “megapixels” but actually contains 80 million photodiodes. These photodiodes are organized in 2×2 groups under 20 million color filter array elements, so it sounds a bit like quad Bayer. The difference is that there are only 20 million microlenses, so each 2×2 group is basically four samples of the same light, not four samples of four spatially different things. As with Canon’s Dual Pixel sensors, the four samples can be used for phase-detect autofocus. A quad Bayer sensor would have a separate microlens for each photodiode, so its photodiodes could not be used for autofocus in that way. I believe the Panasonic GH5S sensor, the IMX299, has a similar structure, but Panasonic never used it for quad pixel autofocus (it may not have full photodiode readout).

Hi again, Alik. I want to ask you about your comment in this article that: “ This was a common misconception with the A7sII vs the A7rII for example. People would assume the A7sII was better in low light for stills, however, if you scaled the image from an A7rII, the results were the same.” I am not sure this is true in all cases. I have lots of other Sony full-frame cameras but have not had the A7S II. Still, I think that, especially at higher ISOs, there’s a noticeable difference in noise between these two cameras, especially chroma noise in the shadows, even after scaling the higher-resolution image down. Whether this is all easily fixable in post to get an equivalent-looking image is another factor.

You are right, it’s not true in all cases. Really depends on the technology behind the sensor and the way it’s configured.

When I say this, I’m talking more about pulling detail. If you scale that 42MP image down, most of the noise gets reduced and the detail is matched. It could still have a layer of light grain, but it’s very fine, and the detail should still be very close.

Now, I personally prefer the lower-megapixel camera in low light for stills at higher ISO because it produces cleaner images out of camera, so it’s less work in post. But even the A7R III should match detail with no problem, maybe even do better because it has no low-pass filter; there will just be more noise, which ends up being very small once scaled. Hope that makes sense. It’s not exactly apples to apples, though, because they tune those sensors for slightly different things, and higher-res sensors typically generate more heat, which can add to the noise, and have slower readout speeds.

But ultimately, if in low light you only have a photon coherency of 800 photons per cubic mm, it doesn’t matter what camera you try to capture that with; you’re only going to be able to pull in the resolution of 800 photons per cubic mm. So it’s good to pick the right camera for the situation.

Have you ever noticed that if you shoot at ISO 1600 in daylight with a very small aperture (high f-number), it looks way better than ISO 1600 in actual low light? That’s because there are still 11,200 photons per cubic mm hitting that sensor in daylight, which still improves the signal-to-noise ratio even at ISO 1600.

The same goes the other way: ISO 100 in low light still won’t look as good as ISO 100 in daylight.

Alik, I appreciate your detailed reply and agree with you on all fronts. I also prefer to do less work in post, and the reason I shoot with big apertures, despite often shooting on a 60MP sensor, is that I am not really interested in spending a lot of time on NR. I also agree that the look in low light is very different. The reality is that even if you have the ability to take a pretty clean image in low light that is exposed to look much brighter than it is, the image often just looks very flat. It’s often much more interesting to go low key and embrace blacks and shadows. A lot of people get overly excited with shadow recovery, and sure, it’s exciting to see what you can do with the sensor, but often they are just introducing noise and losing so much of the interesting contrast.

Yeah, it seems like there is a set resolution that light will give you. I also think this is a micro-contrast thing. In lenses, more elements mean more surfaces, which degrades some of the light coherency. But with low-micro-contrast lenses, you can often get back some good pop when using strobes because you’re blasting the scene with a ton of extra light.

There isn’t a lot of science on this. Michel van Biezen covers some photon stuff on his YouTube channel, which is pretty cool.

I disagree — quad Bayer, at least for “high resolution” is a marketing trick, not much more.

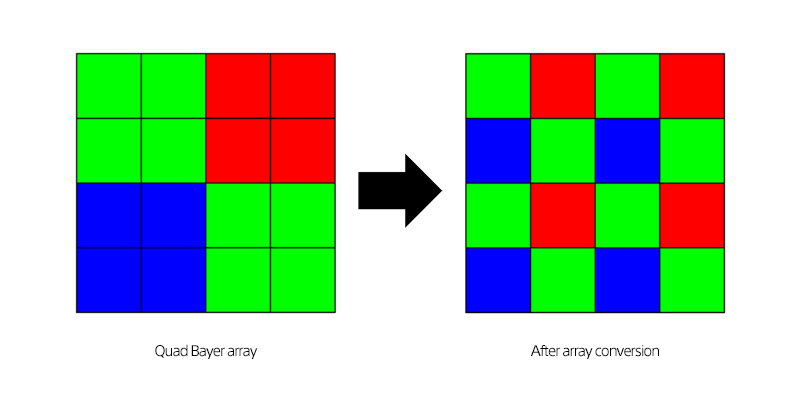

Here’s the problem. Say you capture a raw image with 48 million photodiodes and 12 million color filters, as you would with a quad Bayer sensor. If you want to make a 12-megapixel image, great. Every photodiode sample in your raw image is part of a 2×2 cell, so merge them in some way: average for lower noise, add them for higher sensitivity, or stagger exposure for increased dynamic range. Then run a normal Bayer demosaic (de-matrix) operation to generate your RGB pixels.
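The three merge options described above can be sketched per 2×2 cell. These are illustrative functions, not any sensor vendor’s pipeline; in particular, the staggered-exposure merge assumes a 12-bit clip level of 4095, two long-exposure and two short-exposure photosites per cell, and a fixed exposure ratio between them.

```python
def merge_average(cell):
    """Average the four photosites: same signal level, lower noise."""
    return sum(cell) / len(cell)


def merge_sum(cell):
    """Add the four photosites: roughly four times the signal (higher sensitivity)."""
    return sum(cell)


def merge_staggered(long_pair, short_pair, exposure_ratio, clip_level):
    """Hypothetical staggered-exposure HDR merge for one 2x2 cell.

    Two photosites use a long exposure and two use one that is `exposure_ratio`
    times shorter. Keep the long pair unless it clipped; otherwise scale the
    short pair up to the long pair's brightness.
    """
    if max(long_pair) >= clip_level:
        return sum(short_pair) / len(short_pair) * exposure_ratio
    return sum(long_pair) / len(long_pair)


print(merge_average([10, 12, 14, 16]))                     # 13.0
print(merge_sum([10, 12, 14, 16]))                         # 52
print(merge_staggered([4095, 4095], [600, 610], 8, 4095))  # 4840.0 (highlight recovered)
print(merge_staggered([1000, 1010], [125, 126], 8, 4095))  # 1005.0 (long pair kept)
```

Each merged cell becomes one sample of an ordinary Bayer mosaic, which then goes through a normal demosaic step.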

But what about a 48-megapixel image? How do you get RGB samples for each pixel from the sampled raw data? You basically can’t, because there’s nothing to interpolate. If you go to nearest neighbors on a sample-by-sample basis, you’ll find that you generate identical or near-identical pixels, since every R sample only has one B neighbor, etc.

What they do is worse: they reorder the quad Bayer capture by spatially moving pixels around to generate a Bayer pattern at 48 million samples… but by doing that, they destroy the spatial integrity of the samples. So you create 48 million pixels, but you’re doing that at a much lower effective resolution, and worse, adding spatial distortions.
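The spatial cost of reordering can be estimated with a toy model. This hypothetical sketch treats remosaicing as a pure shuffle: it moves each sample in a 4×4 quad Bayer tile to a same-color site of a standard Bayer tile, choosing the assignment with the smallest total movement. Real remosaic algorithms also interpolate rather than only shuffle, so this only illustrates the kind of displacement the comment describes.

```python
import itertools
import math

QUAD = ["RRGG", "RRGG", "GGBB", "GGBB"]   # one 4x4 quad Bayer tile
BAYER = ["RGRG", "GBGB", "RGRG", "GBGB"]  # the 4x4 Bayer tile a remosaic must imitate


def positions(tile, color):
    """(row, column) of every site with the given color filter."""
    return [(r, c) for r, row in enumerate(tile) for c, ch in enumerate(row) if ch == color]


def best_reorder(color):
    """Distances moved when quad samples go to Bayer sites with the least total movement."""
    src, dst = positions(QUAD, color), positions(BAYER, color)
    best = min(itertools.permutations(dst),
               key=lambda perm: sum(math.dist(s, d) for s, d in zip(src, perm)))
    return [math.dist(s, d) for s, d in zip(src, best)]


moves = [d for color in "RGB" for d in best_reorder(color)]
print(sum(1 for d in moves if d > 0), "of", len(moves), "samples moved")  # 10 of 16 samples moved
print("largest shift:", round(max(moves), 3), "photosites")              # largest shift: 1.414 photosites
```

Even in this best case, 10 of the 16 samples have to move, some by about 1.4 photosite widths, so each output pixel no longer sits exactly where its light was captured.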